The realm of machine learning and artificial intelligence (AI) is perpetually advancing, pushing the boundaries of what’s possible in technology and robotics. While speculative fiction often muses about a future dominated by AI, the real excitement lies in the tangible progress we’re witnessing today, especially in the field of artificial vision. In this article we look into the core of artificial vision in robotics, highlighting its pivotal role in object tracking—a critical capability for the autonomous systems shaping our future. Below, we explore the key technologies and methodologies that empower robots to perceive and interact with their surroundings.

Table of Contents:

- Introduction to Artificial Vision: Unraveling the fundamentals of artificial vision and its transformative impact on robotics.

- The Importance of Object Tracking in Robotics: Understanding why tracking is critical for robotic systems’ functionality and efficiency.

- Core Technologies Behind Artificial Vision: An in-depth examination of the technologies that serve as the backbone for artificial vision in robotics.

- Photogrammetry: The art and science of obtaining reliable information from photographs.

- Structured Light Imaging: A detailed look at how structured light contributes to precise object recognition.

- Time-of-Flight (ToF): Exploring ToF technology and its role in depth mapping and object detection.

- Laser Triangulation: Understanding the principles of laser triangulation in pinpointing object locations.

- Integration of AI in Object Tracking: How artificial intelligence enhances object tracking capabilities in robotic systems.

- Advancements and Future Prospects: Anticipating the future developments in artificial vision and their potential impact on robotics and beyond.

- Conclusion: Reflecting on the journey of artificial vision in robotics and what lies ahead.

Embark on this exploration with us as we uncover the intricacies of artificial vision in robotics, and envision the future it heralds for our world.

In the heart of innovation: A robot leverages artificial vision to precisely track objects, showcasing the synergy between robotics and cutting-edge technology.

1. Introduction to Artificial Vision

Machine learning and optical recognition technologies are pivotal in advancing sectors such as manufacturing, autonomous vehicles, and the defense industry. Despite the diverse objectives across these fields, the common thread that binds them is the critical need for precise object recognition. Artificial vision enables autonomous systems to not only detect and identify objects but also ‘understand’ their context within the environment. This entails distinguishing objects from their backgrounds and accurately categorizing them, a task that becomes particularly challenging in low-contrast situations. For industrial robots, the stakes are high as they must operate with exceptional precision and, at times, collaborate seamlessly with human operators.

Highlighting the significance of these technologies, a comprehensive review conducted in Spain and published in the journal ‘Sensors’ in 2016 described several key techniques underpinning machine vision and robotic guidance. These include photogrammetry, structured light imaging, time-of-flight, and laser triangulation, each offering distinct advantages and challenges. The sections that follow look at each of these methods in turn, covering their principles, their applications, and what they may mean for the future of artificial vision in robotics.

2. The Importance of Object Tracking in Robotics

Object tracking stands as a cornerstone in robotics, integral to the advancement of sectors like manufacturing, autonomous vehicles, and defense. This capability enables robots to interact dynamically with their environment, enhancing efficiency, safety, and decision-making processes.

In manufacturing, precise object tracking is essential for quality control and assembly line automation. Robots equipped with this technology can detect defects, manage intricate assembly tasks, and ensure products meet stringent quality standards. The automotive industry, for instance, has seen a 30% increase in production efficiency due to robotics and object tracking.

Autonomous vehicles rely heavily on object tracking for navigation and safety. This technology allows vehicles to interpret traffic conditions, recognize obstacles, and make informed decisions in real-time, significantly reducing the risk of accidents. Studies indicate that autonomous driving technology could reduce traffic accidents by up to 90%.

In the defense sector, object tracking is pivotal for surveillance, reconnaissance, and threat identification. Drones and unmanned systems utilize this technology to monitor areas of interest, track moving targets, and provide critical data, enhancing national security measures.

However, effective object tracking in robotics faces several challenges. High levels of accuracy and real-time processing are paramount, requiring advanced algorithms and substantial computational resources. Environmental factors, such as varying lighting conditions, weather, and occlusions, further complicate tracking accuracy. Additionally, the ethical implications of surveillance and data privacy present ongoing debates in the application of object tracking technologies.

Despite these challenges, continuous advancements in sensor technology, machine learning algorithms, and computational power are pushing the boundaries of what’s possible in robotic object tracking. As these technologies evolve, we can expect even more innovative applications and improved efficiency in robotics-driven industries.

3. Core Technologies Behind Artificial Vision

Delving into the core of artificial vision reveals a suite of sophisticated methods that enable robots to perceive and understand their surroundings. Each technique, from photogrammetry to laser triangulation, offers a unique approach to capturing and interpreting visual data. This section outlines these key methodologies, shedding light on how they function individually and in concert to empower robotic systems with unparalleled object tracking capabilities. As we explore these methods, consider how their specific applications and advantages contribute to the broader landscape of robotics and artificial vision.

3.1. Photogrammetry for Artificial Vision

Photogrammetry, also known as Image-Based Modeling, is a powerful artificial vision technique that captures the essence of objects or scenes through photography. By taking detailed photos from various angles, this method allows for the creation of intricate drawings, three-dimensional models, and precise measurements. The process hinges on identifying specific points within the images and analyzing how these points’ projections intersect, depending on the camera’s location.
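The idea of intersecting projections can be made concrete with a small example. The sketch below uses linear (DLT) triangulation, one common way to intersect two viewing rays; it is an illustration under stated assumptions, not the method of any particular photogrammetry package. The camera intrinsics `K`, the 0.5 m offset between the cameras, and the test point are all hypothetical values chosen for the demonstration.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2 are 3x4 camera projection matrices; x1, x2 are the pixel
    coordinates (u, v) of the same physical point in each image.
    """
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point into pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: identical intrinsics, second camera shifted 0.5 m along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 4.0])   # a point 4 m in front of camera 1
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With noise-free image points the estimate matches the original point to numerical precision. With real measurements, the two rays rarely intersect exactly, which is one reason high-contrast markers and additional views improve accuracy.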

In many instances, the accuracy of photogrammetry is enhanced by placing high-contrast markers on the object’s surface, ensuring precise detection. However, when using physical markers is impractical, advanced tracking algorithms can automate and streamline the process. Stereo vision, a conceptually similar technique that gleans information from two different viewpoints, places less emphasis on pinpoint accuracy than photogrammetry does.

In the realm of robotics, photogrammetry is invaluable for monitoring a robot’s movements with remarkable precision. It’s often integrated with interferometry, creating a hybrid system capable of tracking both the linear and angular positions of robotic components. Although utilizing multiple photogrammetry setups for a single robot offers comprehensive positional data, this approach can be more costly and is typically reserved for calibration purposes rather than continuous tracking.

3.2. Structured Light Imaging

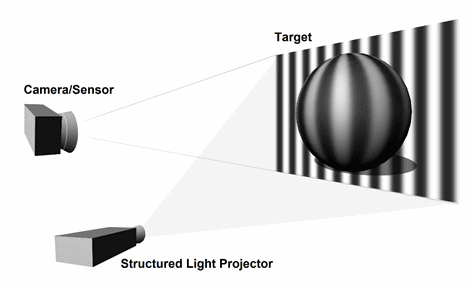

Structured light imaging achieves high-resolution results by projecting known patterns onto a surface and observing them with a camera. The patterns vary in design; a common choice is a grayscale stripe pattern in which pixel intensity varies sinusoidally across the image. When a sequence of such patterns is projected one after another, the approach is known as a time-multiplexing technique. The surface contours distort the pattern as seen by the camera, and that distortion reveals the object’s shape.

Graphic describing the structured light technique for processing objects. Courtesy of Robotics Tomorrow.

The utility of the sine wave pattern lies in its predictable phase: the phase shift that the surface introduces at each pixel can be recovered from the captured images and converted into surface topography. Because time-multiplexing relies on several exposures, the camera and the object must remain stationary while the sequence is captured.
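As an illustration of how phase can be recovered from sinusoidal patterns, here is a minimal sketch of N-step phase shifting, a widely used time-multiplexing scheme. It assumes each captured frame follows the model I_k = A + B·cos(φ + 2πk/N); the function name `wrapped_phase` and the synthetic test data are hypothetical, and a real system would still need phase unwrapping and calibration to turn phase into height.

```python
import numpy as np

def wrapped_phase(images):
    """Recover the wrapped phase map from N phase-shifted fringe images.

    images: array of shape (N, H, W) with N >= 3, where frame k is assumed
    to follow I_k = A + B * cos(phi + 2*pi*k/N).
    Returns phi in the range (-pi, pi].
    """
    images = np.asarray(images, dtype=float)
    n = images.shape[0]
    delta = 2 * np.pi * np.arange(n) / n
    # Weighted sums over the frame axis isolate B*sin(phi) and B*cos(phi).
    s = np.tensordot(np.sin(delta), images, axes=1)
    c = np.tensordot(np.cos(delta), images, axes=1)
    return np.arctan2(-s, c)

# Synthetic check: build four shifted frames from a known phase ramp.
phi_true = np.linspace(-3.0, 3.0, 64).reshape(1, 64)
shifts = 2 * np.pi * np.arange(4) / 4
frames = np.stack([0.5 + 0.4 * np.cos(phi_true + d) for d in shifts])
phi_est = wrapped_phase(frames)
```

The background level A and the fringe contrast B cancel out of the ratio, which is why this scheme tolerates uneven illumination and surface reflectivity reasonably well.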

Conversely, structured light imaging also encompasses a ‘one-shot’ technique, distinguished by its use of unique, non-repeating patterns. This variation allows for camera mobility, as each segment of the pattern is uniquely coded, correlating to a specific area, or ‘local neighborhood,’ on the surface. A key advantage of structured light imaging, regardless of the technique employed, is its exceptional spatial resolution, facilitating precise and detailed analysis of object surfaces.

3.3. Time-of-Flight Method of Artificial Vision

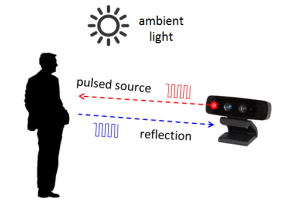

This method of artificial vision uses a modulated light source, typically in the visible or infrared wavelength range, and the emitted light may be patterned in a way similar to structured light imaging. Both continuous-wave and pulsed light can be used. The light travels to the scene, is reflected, and returns to a sensor located next to the source. With continuous-wave modulation, the phase difference between the emitted and received light indicates how long the round trip took; with pulsed light, the delay is timed directly. In both cases the travel time gives the distance to each point, which makes it possible to reconstruct the scene. Ambient light can degrade the detected signal and raise the noise level. Time-of-flight sensors have many uses, one of which is identifying defects in products on assembly lines.

Diagram describing the theory behind time-of-flight. Courtesy of Texas Instruments.
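The relationship between phase, travel time, and distance can be sketched in a few lines. The functions below use the standard relations d = c·Δφ / (4π·f) for continuous-wave modulation and d = c·t / 2 for pulsed light; the function names and the 20 MHz modulation frequency are assumptions chosen for illustration, not values taken from a specific sensor.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def cw_tof_distance(phase_rad, f_mod_hz):
    """Distance from the phase shift of a continuous-wave modulated signal."""
    return C * phase_rad / (4 * math.pi * f_mod_hz)

def unambiguous_range(f_mod_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return C / (2 * f_mod_hz)

def pulsed_tof_distance(round_trip_s):
    """Distance from a directly timed round trip of a light pulse."""
    return C * round_trip_s / 2

f_mod = 20e6  # hypothetical 20 MHz modulation frequency
print(cw_tof_distance(math.pi / 2, f_mod))
print(unambiguous_range(f_mod))
print(pulsed_tof_distance(10e-9))
```

At 20 MHz the unambiguous range is about 7.5 m. Raising the modulation frequency improves depth resolution but shortens that range, which is one of the trade-offs a designer has to balance.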

One identified advantage of time-of-flight is that the sensors are “quite compact and lightweight, and allow real-time 3D acquisition with high frame rate”. This makes time-of-flight well suited to mobile robots that move over a larger range than photogrammetry can practically cover. However, the data from time-of-flight sensors can be limited by noise, so careful calibration and/or postprocessing is required.

3.4. Laser Triangulation for Artificial Vision

Laser triangulation leverages a precisely positioned laser and camera to pinpoint the location of specific points on an object’s surface. By knowing the exact positions of both the laser and the camera, as well as the distance between them, the system can accurately calculate the spatial coordinates of points across the object. This process involves scanning the object’s surface point by point, building a comprehensive map of its topography.
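The geometry behind this calculation is a single triangle, which the following sketch solves with the law of sines. It assumes a simplified two-dimensional setup: the laser sits at the origin, the camera sits a known baseline away along the x-axis, and both angles are measured from the baseline. The function name and the example numbers are hypothetical.

```python
import math

def triangulate_laser_point(baseline_m, laser_angle_rad, camera_angle_rad):
    """Locate a laser spot from the laser/camera baseline and two angles.

    The laser is at (0, 0) and the camera at (baseline_m, 0). Each angle is
    the interior angle between the baseline and the ray to the spot.
    Returns (x, z): position along the baseline and distance from it.
    """
    apex = laser_angle_rad + camera_angle_rad
    if not 0.0 < apex < math.pi:
        raise ValueError("rays do not intersect in front of the baseline")
    # Law of sines gives the range from the laser to the spot.
    r = baseline_m * math.sin(camera_angle_rad) / math.sin(apex)
    return r * math.cos(laser_angle_rad), r * math.sin(laser_angle_rad)

# Hypothetical example: 0.2 m baseline, both rays at 45 degrees.
x, z = triangulate_laser_point(0.2, math.radians(45), math.radians(45))
print(x, z)
```

In this symmetric example the spot lies midway along the baseline at a depth of half the baseline length. In a real scanner the camera angle is derived from the pixel position of the laser spot, so depth resolution depends on the baseline length and the camera's pixel size.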

This method is particularly valuable in industrial settings, where accuracy and precision are paramount. In production and quality control processes, laser triangulation is instrumental. It’s employed in a variety of tasks, from verifying the integrity of vacuum seals on cans to identifying defects on products as they move along a conveyor belt. Its ability to provide precise, real-time measurements makes it a cornerstone technology in automated manufacturing and inspection systems.

4. Integration of AI in Object Tracking

The fusion of Artificial Intelligence (AI) with object tracking has revolutionized the field, offering unprecedented accuracy and efficiency. AI algorithms, particularly those based on machine learning, are adept at parsing complex visual data, making them indispensable for advanced object tracking systems.

AI-enhanced systems excel in recognizing and classifying objects in real-time, even under challenging conditions. These algorithms are trained on vast datasets, enabling them to discern subtle differences and patterns that might elude traditional tracking methods. For instance, in dynamic environments like busy city streets, AI-driven autonomous vehicles can accurately identify and track a multitude of objects, from pedestrians to other vehicles, ensuring safe navigation.
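To show how per-frame detections become tracks, here is a minimal sketch of greedy intersection-over-union (IoU) association, a simple baseline for tracking by detection. It assumes that some detector, for example a neural network, has already produced bounding boxes for each frame; the function names, the box format (x1, y1, x2, y2), and the 0.3 threshold are illustrative choices, and production trackers usually add motion models and appearance features.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def associate(tracks, detections, min_iou=0.3):
    """Match existing tracks to new detections, best overlap first.

    tracks: dict mapping track id -> last known box.
    detections: list of boxes from the current frame.
    Returns a new dict; unmatched detections start new tracks and
    unmatched tracks are dropped.
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    updated, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap even less
        if tid in updated or j in used:
            continue
        updated[tid] = detections[j]
        used.add(j)
    next_id = max(tracks, default=-1) + 1
    for j, det in enumerate(detections):
        if j not in used:
            updated[next_id] = det
            next_id += 1
    return updated

tracks = {0: (0, 0, 10, 10), 1: (50, 50, 60, 60)}
detections = [(51, 51, 61, 61), (1, 0, 11, 10), (100, 100, 110, 110)]
tracks = associate(tracks, detections)
```

Here the two existing objects keep their identifiers after moving slightly, and the third detection, which overlaps nothing, is assigned a new identifier.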

Machine learning models, such as Convolutional Neural Networks (CNNs), have been pivotal in this advancement. They excel in image recognition tasks, accurately classifying objects into predefined categories with high precision. This capability is crucial not only in autonomous vehicles but also in areas like surveillance, where distinguishing between benign and suspicious activities can enhance security measures.

Furthermore, AI’s adaptive learning ability means that these systems continually improve over time. They learn from new data, refining their algorithms to better understand and predict object movements and behaviors. This continuous improvement cycle ensures that AI-integrated tracking systems remain at the cutting edge of technology, pushing the boundaries of what’s possible in robotics and automation.

The integration of AI in object tracking is not just a technical achievement; it’s a transformative shift that is setting new standards for accuracy, efficiency, and reliability in various applications, from industrial automation to autonomous driving and beyond. As AI technology continues to evolve, we can expect even more innovative and effective solutions in object tracking.

5. Advancements and Future Prospects

The landscape of artificial vision is rapidly evolving, with recent innovations heralding a new era of capabilities and applications in robotics. These advancements are not only enhancing existing functionalities but also opening doors to previously unimagined possibilities.

Recent breakthroughs in deep learning and neural networks have significantly improved the accuracy and speed of object recognition and classification, even in complex and dynamic environments. Enhanced sensor technologies, coupled with sophisticated AI algorithms, now allow robots to perceive their surroundings with unprecedented detail and depth.

One notable innovation is the development of 3D vision systems that provide robots with a more nuanced understanding of spatial relationships, enabling more precise navigation and manipulation in three-dimensional space. This has profound implications for industries like logistics and warehousing, where robots equipped with 3D vision are revolutionizing inventory management and order fulfillment processes.

Looking ahead, the integration of quantum computing with artificial vision could exponentially increase processing capabilities, allowing for real-time analysis of vast amounts of visual data. This could significantly enhance autonomous systems’ decision-making processes, making them more reliable and efficient.

Furthermore, the convergence of artificial vision with other emerging technologies like augmented reality (AR) and the Internet of Things (IoT) promises to create new synergies. For instance, in healthcare, robotic systems with advanced artificial vision could perform intricate surgeries with precision beyond human capability, guided by AR overlays.

As these technologies continue to advance, we can anticipate a ripple effect across various sectors. In manufacturing, robots with enhanced vision will drive further automation, increasing efficiency and reducing costs. In urban planning and smart city development, these advancements will contribute to safer and more efficient transportation systems.

6. Conclusion: The Future of Artificial Vision in Robotics

As we peer into the horizon of artificial vision and robotics, the fusion of these disciplines heralds an era of unprecedented advancements. The methods we’ve explored—photogrammetry, structured light imaging, time-of-flight, and laser triangulation—represent foundational pillars in the vast domain of machine learning and optical recognition.

The future promises even deeper integration of AI with robotics, a topic we delve into with our forthcoming article, “AI in Robotics: Exploring Emerging Trends and Future Prospects.” This piece illuminates the dynamic evolution within the field, spotlighting the burgeoning potential for innovation.

Anticipating the years ahead, we envision the emergence of more refined and multifaceted applications of artificial vision across diverse industries. The relentless advancement of these technologies is set to bolster precision, efficiency, and adaptability in increasingly complex scenarios. Imagine machines that, empowered by sophisticated AI algorithms, not only learn from their surroundings but also adeptly adjust to new challenges, paving the way for heightened autonomy.

The symbiosis of AI and robotics also portends significant societal boons—enhancing vehicular safety, refining manufacturing accuracy, and bolstering defense research. Yet, as we navigate this exciting trajectory, the ethical dimensions of such advancements warrant careful consideration, ensuring responsible development and application.

In essence, the odyssey of artificial vision in robotics is a thrilling, ever-unfolding saga. Bolstered by relentless innovation and the expertise of pioneers like DataRay, we stand on the cusp of a new epoch, an era in which intelligent machines perceive, comprehend, and engage with the world in ways once confined to the realms of fiction.