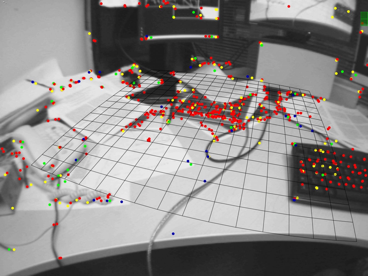

A map created by visual SLAM; the colored points are map points. Courtesy of Kudan.

Visual SLAM is now a popular approach in autonomous mobile robot development. A mobile robot is one capable of moving itself from place to place. For example, the first mobile robot emerged during World War II as a smart flying bomb that used guidance systems and radar control. After some 80 years of research, intelligent technologies for autonomous mobile robots are advancing rapidly and becoming commercially available at affordable prices. We are entering an era of smart robots.

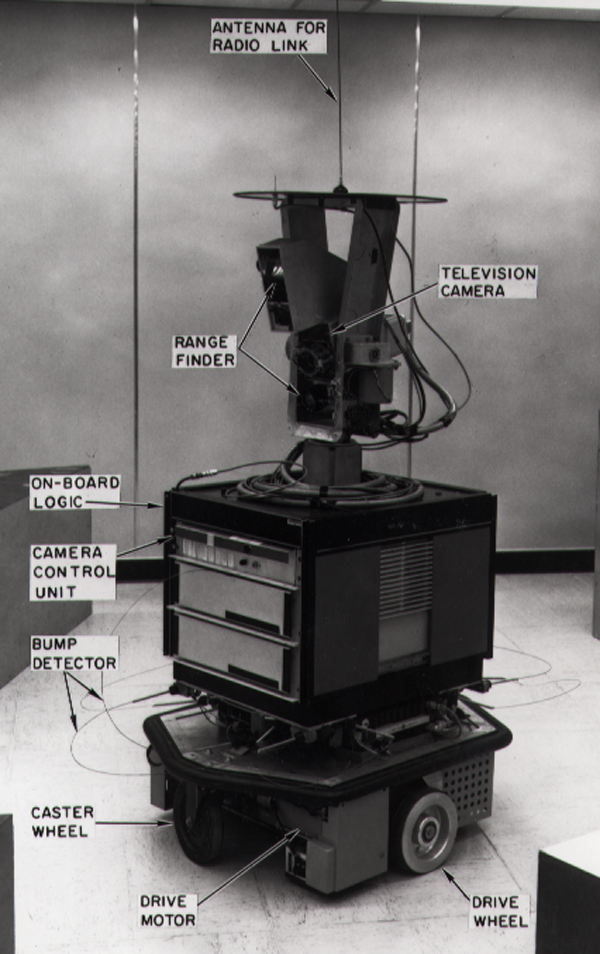

The First Autonomous Mobile Robot

From approximately 1966 to 1972, Stanford Research Institute developed the world’s first mobile, autonomous robot, named Shakey. The primary goal of the project was to investigate techniques in artificial intelligence. In addition, the project set out to compose a hierarchy of computer programs that would control a mobile automaton in a real environment. Before Shakey, robots had to be instructed on each individual step of a larger task. As a breakthrough, Shakey could perform tasks by processing sensory information gathered from optical sensors and detectors, plan the next step ahead, and learn from previous experience. The development of Shakey had a far-reaching impact on the fields of robotics and artificial intelligence.

Shakey Robot. Courtesy of Wikimedia Commons

What is Robot Navigation?

As robotics technology advances, the ability of a mobile robot to avoid obstacles accurately is becoming more sophisticated. Robot navigation is the robot’s ability to determine its own position in its reference frame and then plan a path toward a target location. Most navigation systems in current mobile robots rely on simultaneous localization and mapping (SLAM), a set of algorithms that estimates the position and orientation of the robot's sensors with respect to the surroundings while building a map of those surroundings at the same time. Visual SLAM uses 2D or 3D vision to perform localization and mapping when neither the environment nor the location of the sensor is known.
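To make the "plan a path toward a target location" step concrete, here is a minimal sketch of path planning on an occupancy grid. It assumes the robot's position is already known and the map is a small 2D grid where 1 marks an obstacle; the function name `plan_path` and the grid layout are illustrative, and breadth-first search stands in for whatever planner a real navigation stack would use.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid[r][c] == 1 marks an obstacle (hypothetical map encoding).
    Returns the list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal is unreachable


# A wall blocks the direct route, so the path has to go around it.
example_grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(plan_path(example_grid, (0, 0), (2, 0)))
```

In a SLAM-based system the grid and the start cell would both come from the SLAM estimate, which is why errors in localization or mapping carry straight through to the planned path.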

Optical (vision-based) navigation uses computer vision algorithms and optical sensors. The sensor data is rich enough to extract the visual features required for localization in the surrounding environment. Optical sensors can be single-beam (1D) or sweeping (2D) laser rangefinders, 3D high-definition LiDAR, 3D flash LiDAR, or 2D cameras using CCD or CMOS arrays. Visual SLAM has been the subject of intense research because cameras are increasingly ubiquitous on mobile robots. Sensor comparisons for SLAM applications have been widely studied as well.

Comparing Laser Range Finders and RGB-D Sensors

Laser range finders are fast and accurate, so they produce extremely precise distance measurements. Their low cost in robot applications has also made them very popular in industry.

An RGB-D sensor is a specific type of depth-sensing device. It projects structured infrared light and works in association with an RGB camera. Its advantage is that it can recover reliable 3D world structure and a 2D image simultaneously, with a depth channel that is largely independent of ambient lighting.
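As a rough illustration of how a depth channel yields 3D structure, the sketch below back-projects one depth pixel into a 3D point with the standard pinhole camera model. The function name and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are assumptions chosen for the example, not parameters of any particular sensor.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with depth in meters to a 3D point in the camera frame.

    Pinhole model: fx, fy are focal lengths in pixels and (cx, cy) is the
    principal point. All intrinsics here are hypothetical example values.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Assumed intrinsics for a 640x480 image.
FX, FY, CX, CY = 525.0, 525.0, 320.0, 240.0

# The pixel at the principal point lies on the optical axis.
print(backproject(320, 240, 2.0, FX, FY, CX, CY))
# A pixel to the right of center maps to a point with positive x.
print(backproject(400, 240, 2.0, FX, FY, CX, CY))
```

Applying this to every pixel of a depth image gives the kind of point cloud that an RGB-D based SLAM system works with, and the color image supplies the matching 2D appearance for each point.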

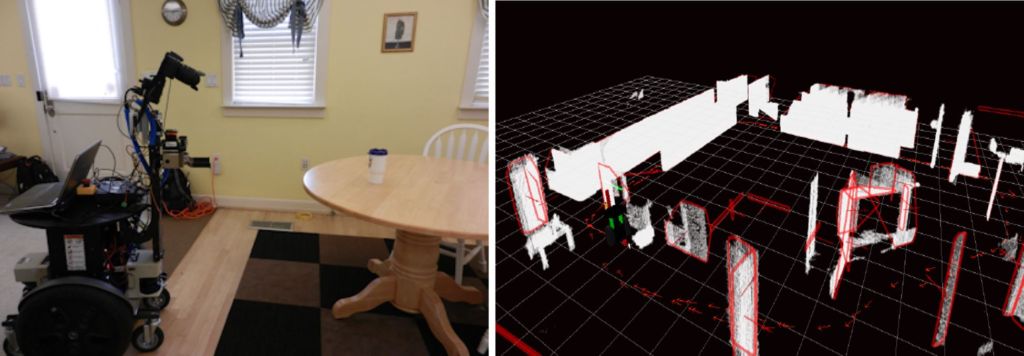

Trevor and his team presented an experiment to evaluate the contributions of a 2D laser scanner and a 3D point cloud camera to planar surface SLAM [1]. The robot followed different trajectories in three environments: low-clutter, medium-clutter, and high-clutter. The researchers plotted and analyzed the visual features measured in each environment by the 2D laser scanner and the 3D camera mounted on the robot.

A visual feature, like a landmark, is a small region of the image that looks different from its surroundings. The team concluded that the laser scanner cannot extract useful features if it is mounted below the height of the obstacles in the environment. In short, the laser scanner has a long range and a wide field of view, but it does not produce 3D information. In contrast, the 3D camera can map planes in any orientation, but only over small-scale areas.

Left: The robot platform used for the experiment. The sensors are placed on the gripper. Right: A visualization of the map produced by the SLAM system in the experiment. Planar features, such as table corners, are shown as red convex hulls with red normal vectors; measurements extracted from point clouds are shown in white; the robot’s trajectory is marked by small red arrows. [1]

How to Get a 3D Image from a 2D Tool?

One popular method of acquiring 3D information from a 2D laser range finder is to mount it on a vertically rotating servomotor [2], with a scanning angle of up to 180 degrees. Scanning a 3D environment this way requires synchronizing the raw scans with the servo motion, and because each 3D result is assembled from multiple 2D scans, acquisition is rather time-consuming.
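The geometry behind this method can be sketched in a few lines. The code assumes the servo tilts the scan plane about the sensor's lateral (y) axis and that each range reading is tagged with the tilt angle at the moment it was taken, which is exactly why synchronization matters. The function names, the axis convention, and the numbers in the timing example are illustrative assumptions, not values from [2].

```python
import math


def scan_point_to_3d(range_m, beam_angle_rad, tilt_angle_rad):
    """Map one 2D range reading to a 3D point in the sensor base frame.

    beam_angle_rad: angle of the beam inside the scan plane (0 = straight ahead).
    tilt_angle_rad: servo tilt of the scan plane about the y axis (0 = level,
    positive tilts the forward beam upward). Both conventions are assumptions.
    """
    # Point inside the (untilted) scan plane.
    x_plane = range_m * math.cos(beam_angle_rad)
    y_plane = range_m * math.sin(beam_angle_rad)
    # Tilting about the y axis leaves y unchanged and splits x into x and z.
    x = x_plane * math.cos(tilt_angle_rad)
    z = x_plane * math.sin(tilt_angle_rad)
    return (x, y_plane, z)


def sweep_time_s(tilt_range_deg, tilt_step_deg, scan_rate_hz):
    """Time for one full 3D sweep if each tilt step needs one complete 2D scan."""
    n_scans = round(tilt_range_deg / tilt_step_deg) + 1
    return n_scans / scan_rate_hz


print(scan_point_to_3d(2.0, 0.0, math.radians(30)))
# Hypothetical numbers: 180 degree sweep, 1 degree steps, 40 scans per second.
print(sweep_time_s(180, 1, 40))
```

With these hypothetical numbers a single 3D sweep takes several seconds, which illustrates why the approach is slow compared with a sensor that captures depth for the whole scene at once.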

Challenges in Visual SLAM

Visual SLAM has made autonomous movement possible and inspired many applications. It has been widely studied on ground, aerial, and underwater robots. However, researchers in this field still face several challenges.

Most mobile robots use Global Positioning System (GPS) sensors to acquire position information. However, the GPS signal is not always stable, and a robot's mechanical linkages can slip. The sensors mounted on the robot can therefore receive inaccurate information, and the system ends up processing wrong tracking data.
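A minimal sketch of how a small measurement error turns into a large tracking error: the code below integrates wheel odometry for a differential-drive robot, first with exact readings and then with one wheel over-reporting its travel by 2%. The differential-drive model, the function name, and every number are assumptions made for illustration.

```python
import math


def integrate_odometry(wheel_steps, wheel_base_m):
    """Dead-reckon a pose (x, y, heading) from per-step wheel travel.

    wheel_steps: iterable of (left_m, right_m) distances reported per step.
    wheel_base_m: distance between the two wheels (hypothetical value below).
    """
    x = y = heading = 0.0
    for d_left, d_right in wheel_steps:
        d_center = (d_left + d_right) / 2.0
        d_heading = (d_right - d_left) / wheel_base_m
        # Advance along the heading at the middle of the step.
        x += d_center * math.cos(heading + d_heading / 2.0)
        y += d_center * math.sin(heading + d_heading / 2.0)
        heading += d_heading
    return x, y, heading


WHEEL_BASE_M = 0.5
# The robot actually drives straight for 100 steps of 0.1 m.
exact = [(0.1, 0.1)] * 100
# Same motion, but the left wheel reading is 2% too high at every step.
biased = [(0.102, 0.1)] * 100

print(integrate_odometry(exact, WHEEL_BASE_M))
print(integrate_odometry(biased, WHEEL_BASE_M))
```

In the second run the estimated heading drifts steadily even though the robot drove in a straight line, so the estimated position ends up well off the true track. This is the kind of accumulated error that a SLAM system has to correct using observations of the environment.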

Other difficulties stem from small errors in camera calibration. For example, a miscalibrated camera model projects 3D points to incorrect locations in the image plane. That produces a wrong mapping result, which in turn can cause the robot's trajectory planning to fail.
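The effect of a calibration error can be sketched with the pinhole projection model. The example projects the same 3D point with a "true" focal length and with a focal length that is 2% off, then measures how far the projected pixel moves. The function names and all numeric values are assumptions for illustration.

```python
import math


def project(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates (pinhole model)."""
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def pixel_shift(point_xyz, true_f, assumed_f, cx, cy):
    """Distance in pixels between the projections under two focal lengths."""
    u_true, v_true = project(point_xyz, true_f, true_f, cx, cy)
    u_est, v_est = project(point_xyz, assumed_f, assumed_f, cx, cy)
    return math.hypot(u_est - u_true, v_est - v_true)


# Hypothetical setup: true focal length 500 px, calibration result 510 px.
point = (0.5, 0.0, 2.0)  # meters, camera frame
print(project(point, 500.0, 500.0, 320.0, 240.0))
print(project(point, 510.0, 510.0, 320.0, 240.0))
print(pixel_shift(point, 500.0, 510.0, 320.0, 240.0))
```

A shift of a few pixels looks small, but a visual SLAM system triangulates map points from such projections, so a systematic bias of this kind distorts the reconstructed map rather than averaging out.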

Conclusion

In summary, different SLAM applications require different types of sensors, chosen for the environment the robot will encounter. The properties of each sensor determine which SLAM approach suits a given mobile robot application. Proper sensor selection is important because it affects the quality of the environment information available to the robot. Researchers are putting great effort into making sensors lighter and cheaper. With high-quality sensors, visual SLAM will be the most practical technology for mobile robotics applications.

References

[1] A. J. Trevor, J. Rogers, and H. I. Christensen, “Planar surface SLAM with 3D and 2D sensors,” IEEE International Conference on Robotics and Automation (ICRA), 2012.

[2] O. Wulf and B. Wagner, “Fast 3D scanning methods for laser measurement systems,” International Conference on Control Systems and Computer Science (CSCS14), pp. 2–5, 2003.